In a previous post on artificial intelligence (AI), about a year ago, I argued that apps like ChatGPT were neither artificial nor intelligent. These large language models (LLMs) were basically a rear-view mirror into what is posted on the web: LLMs learn how words are related to one another and develop answers based on these patterns and relationships. Well, it is really a bit more sophisticated than that.
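
To make the "rear-view mirror" idea concrete, here is a deliberately tiny Python sketch of a bigram model. It counts which word follows which in its training text and then predicts the most frequent follower. This is not how ChatGPT works: real LLMs are large neural networks trained on vastly more data. The shared idea is answering from patterns found in past text. The function names `train_bigrams` and `predict_next` and the toy corpus are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # no pattern to look back on: the "mirror" shows nothing
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus standing in for "what is posted on the web"
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat  ("cat" follows "the" twice)
print(predict_next(model, "dog"))  # None (never seen in training)
```

The model can only echo what it has already seen. That is the limitation the rest of this post is about.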

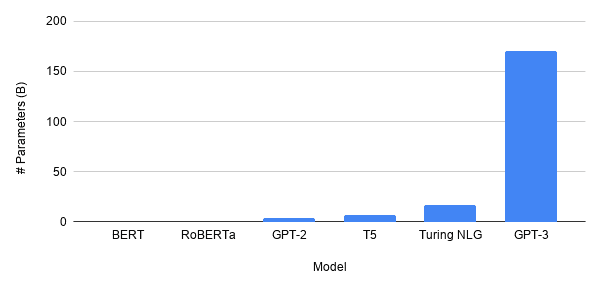

Current business interest in AI is high: 64% of businesses believe that artificial intelligence will help increase overall productivity, and many are applying AI to improve production processes, automate tasks, and develop new products or services. The AI industry is actively pursuing artificial general intelligence (AGI), with major companies like OpenAI, Google DeepMind, and Anthropic leading the charge. AGI, also known as strong AI, refers to a form of artificial intelligence that could perform the intellectual tasks that humans can, mimicking human intelligence without human intervention. As I mentioned, LLMs initially focused on the relationships between words, using methods like word embeddings to capture context and meaning based on proximity and frequency of use. These early models often struggled with more complex tasks because they could not fully grasp the nuanced meanings and implications of language.
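
Word embeddings represent each word as a list of numbers (a vector), so that words used in similar contexts end up close together. The minimal Python sketch below measures that closeness with cosine similarity. The three-dimensional vectors are hypothetical values chosen by hand for illustration. Real models learn their vectors from data and use hundreds or thousands of dimensions.

```python
import math

# Hypothetical hand-picked 3-dimensional embeddings (illustration only).
EMBEDDINGS = {
    "rain": [0.9, 0.1, 0.0],
    "umbrella": [0.8, 0.2, 0.1],
    "sunshine": [0.1, 0.9, 0.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other word whose vector points most nearly the same way."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(most_similar("rain"))  # umbrella (about 0.98 vs about 0.22 for sunshine)
```

Closeness in this space captures association, not understanding. That is why such models could link "rain" and "umbrella" yet still miss nuance.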

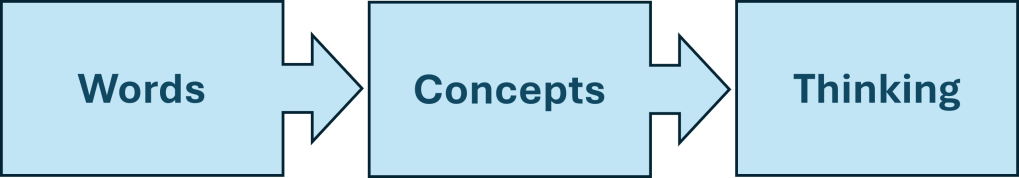

A recent article by Ryan Daws in TechForge describes a new methodology introduced by researchers from Google DeepMind and the University of Southern California. This methodology, called SELF-DISCOVER, is designed to significantly improve the reasoning capabilities of LLMs (reasoning in LLMs, or RLLM) when tackling complex problems. This evolution is depicted in the diagram above.

In the last couple of years, advances in machine learning and natural language processing have led to more sophisticated models that can identify concepts, understand context, and even reason to some extent. These large language models, trained on vast amounts of text data, can generate human-like responses and answer complex queries. Yet it is important to note that while these models are advanced, they still fall short of truly "thinking" like humans. They do not possess consciousness or an understanding of the world; they still operate based on patterns and correlations in the data they were trained on.

Reasoning in Large Language Models (RLLM)

"Reasoning" is the process of making sense of information, drawing conclusions, and making decisions or predictions. Just as humans reason through problems or questions, developers want AI to do the same. This starts with recognizing concepts within the input data to understand their meaning, and then using this understanding to generate appropriate responses or actions. "Reasoning in Large Language Models" (RLLM) is about developing problem-solving strategies to understand the question, identify the concepts involved, determine what information is needed to build the answer, and then generate an appropriate response. These strategies are increasingly based on the combined use of sophisticated atomic reasoning modules as part of overall "AI reasoners". The SELF-DISCOVER framework operates in two stages:

- Stage one involves composing a coherent reasoning structure specific to the task by leveraging a set of "atomic" reasoning modules and task examples.

- In stage two, during decoding, the LLM follows this self-discovered structure to arrive at the final solution. Decoding refers to the process of transforming the output of a model (typically sequences of numbers for language models) back into human-readable text.
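
The two stages can be sketched in a few lines of Python. In SELF-DISCOVER, the atomic modules are natural-language reasoning instructions, and an LLM composes and follows the structure. In this hypothetical sketch, plain functions stand in for the modules, and a crude keyword rule stands in for the LLM. The module names (`decompose`, `verify`, `conclude`) and the helpers `compose_structure` and `solve` are invented for illustration. The point is the shape of the method: a structure is composed once per task and then followed for each instance.

```python
from typing import Callable, Dict, List

# Hypothetical atomic module: one small step that reads and updates a shared
# working state. Plain functions stand in for LLM-interpreted instructions.
Module = Callable[[dict], dict]


def decompose(state: dict) -> dict:
    """Break the question into sub-questions joined by ' and '."""
    state["parts"] = [p.strip() for p in state["question"].split(" and ")]
    return state


def verify(state: dict) -> dict:
    """Keep non-empty sub-questions; use the whole question if it was not decomposed."""
    parts = state.get("parts", [state["question"]])
    state["checked"] = [p for p in parts if p]
    return state


def conclude(state: dict) -> dict:
    """Turn the checked parts into a final answer string."""
    state["answer"] = f"{len(state['checked'])} sub-question(s): " + "; ".join(state["checked"])
    return state


LIBRARY: Dict[str, Module] = {"decompose": decompose, "verify": verify, "conclude": conclude}


def compose_structure(task_examples: List[str]) -> List[str]:
    """Stage one (sketch): choose an ordered list of modules once for the whole task.
    A keyword rule replaces the LLM-driven composition."""
    needs_decomposition = any(" and " in ex for ex in task_examples)
    return (["decompose"] if needs_decomposition else []) + ["verify", "conclude"]


def solve(structure: List[str], question: str) -> str:
    """Stage two (sketch): follow the composed structure step by step for one instance."""
    state = {"question": question}
    for name in structure:
        state = LIBRARY[name](state)
    return state["answer"]


structure = compose_structure(["Is it raining and is it windy?"])
print(structure)  # ['decompose', 'verify', 'conclude']
print(solve(structure, "Will it rain and will it be cold"))
# 2 sub-question(s): Will it rain; will it be cold
```

Composing the structure once and reusing it lets the expensive planning step be shared across every question of the same kind.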

Atomic Reasoning Modules

"Atomic" in "atomic reasoning modules" refers to breaking complex problems or tasks down into smaller, manageable parts, or "atoms". Each atom represents a fundamental unit of reasoning or a single step in a process. For instance, if an AI system is trained to understand the concept of "weather", it should be able to identify related elements like temperature, precipitation, and wind speed, and know how they interrelate. If asked about the weather forecast, the AI should be able to infer from the concept of "rain" that it might not be a good day for outdoor activities. A crucial piece of this puzzle is the critical thinking module, an atomic reasoning module that enables AI systems to tackle more complex problems, adapt and make judgments on their own, and ultimately better mimic human reasoning. This module helps the AI:

- Analyze information by breaking down complex data into smaller parts to understand it better,

- Evaluate evidence by assessing the reliability and relevance of the information it has,

- Understand context by considering background information to make sense of the data,

- Identify biases by avoiding jumping to conclusions based on incomplete or biased information, and

- Make decisions by evaluating the pros and cons of different options to develop the best recommendations.
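
Applied to the weather example, three of these steps can be sketched in Python. The sketch evaluates evidence by source reliability, avoids concluding from incomplete information, and makes a decision by weighing a benefit against an expected cost. It is a hypothetical illustration, not how any particular AI system implements critical thinking. The `Report` type, the reliability scores, the thresholds, and the benefit and cost values are all invented for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Report:
    source: str
    rain_chance: float  # forecast probability of rain, 0.0 to 1.0
    reliability: float  # hypothetical trust score for the source, 0.0 to 1.0


def evaluate_evidence(reports: List[Report], min_reliability: float = 0.5) -> List[Report]:
    """Evaluate evidence: drop reports whose source falls below a reliability threshold."""
    return [r for r in reports if r.reliability >= min_reliability]


def estimate_rain(reports: List[Report]) -> Optional[float]:
    """Reliability-weighted average of the remaining forecasts.
    Returns None with fewer than two sources, to avoid concluding from incomplete information."""
    if len(reports) < 2:
        return None
    total_weight = sum(r.reliability for r in reports)
    return sum(r.rain_chance * r.reliability for r in reports) / total_weight


def recommend(rain: Optional[float], benefit: float = 1.0, cost_if_wet: float = 2.0) -> str:
    """Make a decision: go out only if the benefit outweighs the expected cost of rain."""
    if rain is None:
        return "not enough reliable information"
    expected_cost = rain * cost_if_wet
    return "go outside" if benefit > expected_cost else "stay indoors"


# Hypothetical forecasts from sources of differing reliability
reports = [
    Report("national weather service", rain_chance=0.8, reliability=0.9),
    Report("local station", rain_chance=0.6, reliability=0.7),
    Report("anonymous blog", rain_chance=0.1, reliability=0.2),
]
trusted = evaluate_evidence(reports)  # the anonymous blog is filtered out
rain = estimate_rain(trusted)         # (0.8*0.9 + 0.6*0.7) / 1.6, about 0.7125
print(recommend(rain))                # stay indoors
```

Each function is one "atom" that can be checked on its own. The decision step only runs on evidence that has already passed the earlier checks.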

Results

The SELF-DISCOVER framework described in Daws' article shows up to a 32% performance increase compared to traditional methods like Chain of Thought (CoT) prompting. It promises the ability to tackle challenging reasoning tasks, answer questions more accurately, and document the reasoning process, and it is considered a significant step towards achieving artificial general intelligence (AGI). Of course, it is important to remember that while these models can seem very smart, their "reasoning" is based on patterns they have learned from vast amounts of data, not on creative thinking. And just like humans, these models can draw the wrong inferences from inaccurate or biased data and make mistakes!

In Summary

Beyond performance gains, the Google DeepMind and University of Southern California research suggests more advanced problem-solving capabilities that align with human reasoning patterns. The evolution of AI continues, and while we have not reached artificial general intelligence yet, we are inching closer to AI models that can reason and interact to provide more accurate, useful, and nuanced results.

July 10, 2018